Lightweight tools for integrative single-cell data analysis

TL;DR. We develop scalable and memory-efficient tools for single-cell data analysis.

There are already many tools.

Ever since single-cell technology was introduced to the world, we have seen more tools every year, every month. See scRNA-tools, for example. Single-cell data analysis often involves many steps of data processing, quantification, and statistical inference. The technology is imperfect, and each step deserves careful consideration of different approaches. We bioinformaticians test different combinations of small tools and tailor a pipeline that works best for each project. Perhaps there is no master algorithm, and the competition among computational biologists is indeed fierce.

The history of genomics repeats itself. In a large part of current single-cell data analysis, we are probably seeing what happened in RNA-seq, and even in gene expression microarray analysis. Once we know how to normalize scRNA-seq data, we want to know how to test for differentially expressed genes. Then we want to build more audacious models, such as gene regulatory networks. Is there anything we can contribute from a unique perspective? Can existing tools address all the challenges of single-cell data analysis, e.g., “eleven grand challenges in single-cell data science”? I believe not. There has always been enough room for innovation and creativity.

Okay, that sounds meek and quite passive. Why do we dare to jump into the battlefield? We are not a software-engineering lab, but we can become impatient enough to feel the need for a better tool. Moreover, we want to understand why and how a certain method works, and when that method doesn’t work as expected.

The underlying data matrix is sparse, really sparse, but most methods make it dense.

Yes, the data matrix is sparse, but it will easily blow up memory if you fill in all the zeros. Our first impression was that memory overhead was never an issue for most people. Lots of methods focus on replacing the zeros with some other fractional values. Without questioning the validity of imputation methods, we worried more about the scalability of that strategy. Can such a strategy work for millions of cells with billions of non-zero elements? Most methods are tested on rather small data sets of a few thousand cells. If a data matrix is sparse, why not build a method that takes advantage of the sparsity? What if most zero values did not occur by missingness? What if droplet scRNA-seq is not zero-inflated?
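To put rough numbers on the memory concern, here is a back-of-the-envelope sketch. The matrix size (30,000 genes by one million cells), the 1,500 non-zero genes per cell, and the byte widths are illustrative assumptions, not measurements from our data. The sparse estimate assumes a generic compressed-sparse-column layout, not any particular library's internals.

```python
def dense_bytes(n_rows, n_cols, bytes_per_value=8):
    """Memory needed if every entry, zeros included, is stored."""
    return n_rows * n_cols * bytes_per_value


def csc_bytes(n_cols, nnz, bytes_per_value=8, bytes_per_row_index=4,
              bytes_per_col_pointer=8):
    """Memory for a compressed-sparse-column layout:
    one value and one row index per non-zero, plus n_cols + 1 column pointers."""
    per_nonzero = bytes_per_value + bytes_per_row_index
    return nnz * per_nonzero + (n_cols + 1) * bytes_per_col_pointer


# Illustrative sizes only (assumptions, not real data)
n_genes, n_cells = 30_000, 1_000_000
nnz = n_cells * 1_500  # assume about 1,500 detected genes per cell

print(f"dense : {dense_bytes(n_genes, n_cells) / 1e9:.1f} GB")
print(f"sparse: {csc_bytes(n_cells, nnz) / 1e9:.1f} GB")
```

Under these assumptions the dense matrix needs about 240 GB while the sparse layout needs about 18 GB, which is why filling in the zeros stops being an option long before we reach millions of cells.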

We develop a scalable approach to index millions of cells for fast random access.

We like to work on a sparse data matrix formatted as a list of triplets, and compressed by gzip or bgzip. The rows correspond to features/genes; the columns correspond to UMI barcodes/cells. We assume the triplets (row, column, value) are sorted by the columns (cells) in ascending order. To mark the line where the columns change, we modify the indexing scheme of TABIX and create a separate .index file to store all the random access points.
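The idea of marking where each column starts can be illustrated with a minimal sketch. This is not our actual .index format: it works on uncompressed bytes held in memory, and the function names `build_column_index` and `read_column` are hypothetical. With a bgzip-compressed file, the stored positions would be block-level virtual offsets, as in TABIX, rather than plain byte offsets.

```python
import io


def build_column_index(handle):
    """Scan column-sorted 'row col value' lines once and record,
    for each column, the byte offset of its first triplet."""
    index = {}
    offset = handle.tell()
    for line in iter(handle.readline, b""):
        col = int(line.split()[1])
        if col not in index:  # first line of a new column
            index[col] = offset
        offset = handle.tell()
    return index


def read_column(handle, index, col):
    """Jump straight to one column and read until the column changes."""
    if col not in index:
        return []  # the cell has no non-zero entries
    handle.seek(index[col])
    entries = []
    for line in iter(handle.readline, b""):
        row, c, value = line.split()
        if int(c) != col:
            break
        entries.append((int(row), float(value)))
    return entries


# Toy triplets sorted by column; column 3 is empty
triplets = b"1 1 3\n4 1 1\n2 2 5\n1 4 2\n3 4 7\n"
fh = io.BytesIO(triplets)
index = build_column_index(fh)
print(read_column(fh, index, 4))
```

The index is built in a single pass and holds only one offset per cell, so it stays small even when the matrix itself does not fit in memory.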

We implement commonly used matrix operations to manipulate data more swiftly. In some cases, it is worthwhile to re-implement low-level functions, since we may not need all the steps implemented in a general-purpose library. We were simply too impatient to wait out the long I/O time needed to read a full 10x matrix (.mtx.gz) using either scipy.io.mmread in Python or Matrix::readMM in R. As 10x matrices keep growing, we also discovered a numerical limit in R’s Matrix::readMM (fewer than two billion non-zero elements) and ran into a similar issue on low-memory machines, such as a modest 8 GB laptop. So we did the dirty work of implementing it in C++. Combined with the indexing scheme, we can now efficiently take a subset of a large matrix stored in a file without worrying about memory capacity, while minimizing the overhead of character parsing and I/O.
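To illustrate why a subset can be taken without loading the whole matrix, here is a minimal Python sketch that streams a gzip-compressed Matrix Market file line by line and keeps only the requested columns. It is a stand-in for the idea, not our C++ implementation; the function name `stream_columns` and the toy file are hypothetical, and it assumes a plain coordinate-format file with one triplet per line.

```python
import gzip
import os
import tempfile


def stream_columns(path, wanted_cols):
    """Read a .mtx.gz file sequentially and keep only triplets whose
    column is in wanted_cols; memory use scales with the subset size."""
    wanted = set(wanted_cols)
    kept = []
    with gzip.open(path, "rt") as fh:
        line = fh.readline()
        while line.startswith("%"):  # skip the banner and comment lines
            line = fh.readline()
        n_rows, n_cols, nnz = map(int, line.split())  # size line
        for line in fh:
            row, col, value = line.split()
            if int(col) in wanted:
                kept.append((int(row), int(col), float(value)))
    return (n_rows, n_cols, nnz), kept


# Toy 3 x 4 matrix with 5 non-zero entries, written to a temporary file
demo = (
    "%%MatrixMarket matrix coordinate integer general\n"
    "3 4 5\n"
    "1 1 3\n3 1 1\n2 2 5\n1 4 2\n3 4 7\n"
)
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "demo.mtx.gz")
    with gzip.open(path, "wt") as fh:
        fh.write(demo)
    shape, subset = stream_columns(path, [2, 4])

print(shape, subset)
```

Streaming alone still has to decompress and parse every line; skipping directly to the wanted cells is what the column index adds on top.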

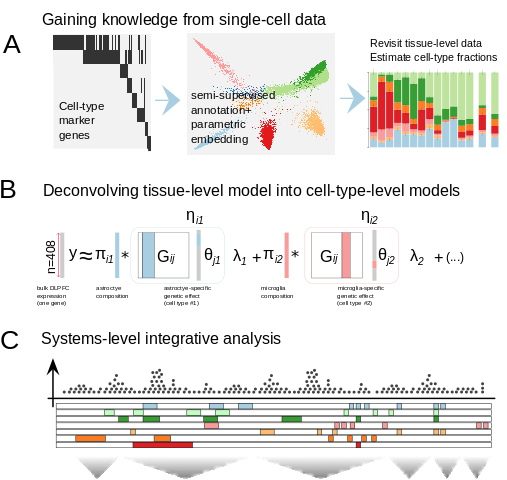

We want to focus on integrative data analysis across multiple data types and modalities.

With these tools, including the ones under development, we want to do science. We want others to do science using our tools. We will keep adding somewhat more complex routines for convenience, including cell type annotation, data aggregation, cell type deconvolution, batch-balancing k-nearest neighbors, and more.